Crawler

What is a Crawler? – Definition

A crawler (also known as a spider or spiderbot) is an Internet bot used by search engines. It collects information about a website's structure and content so that its pages can be indexed and, if judged valuable, displayed to users in the search results.

What are Web Crawlers for?

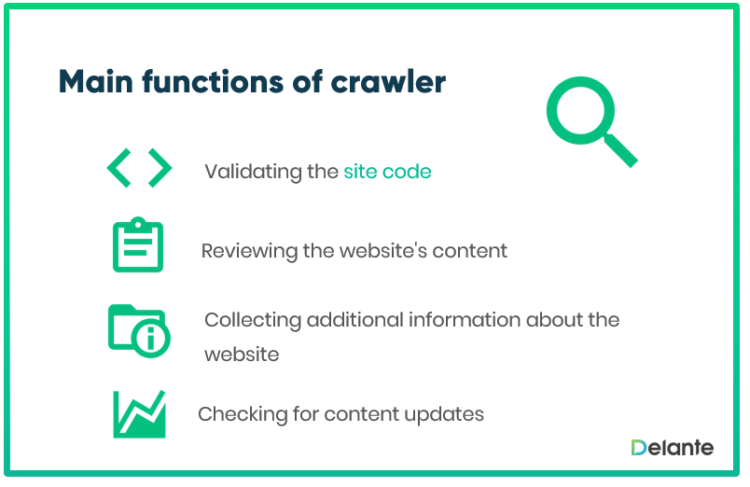

The main functions of crawlers are:

- to validate the site code,

- to review the website’s content,

- to collect additional information about the site relevant to users,

- to check for content updates available on the Internet.

The best-known crawler is Googlebot. Note that crawling a page does not guarantee that it will be indexed, so a crawled page may never appear in the search results.
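
To make the difference between crawling and indexing concrete, here is a minimal Python sketch. It is not how Googlebot or any real search engine works. It assumes a tiny in-memory "web" (the hypothetical `PAGES` dictionary) instead of real HTTP requests, and it uses the standard `noindex` robots meta tag as the only reason a crawled page is left out of the index. The names `PageParser` and `crawl` are made up for this illustration.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch pages over HTTP.
PAGES = {
    "/": '<a href="/about">About</a> <a href="/draft">Draft</a>',
    "/about": '<p>About us</p> <a href="/">Home</a>',
    "/draft": '<meta name="robots" content="noindex"><p>Work in progress</p>',
}


class PageParser(HTMLParser):
    """Collects outgoing links and notes a robots 'noindex' meta tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True


def crawl(start, pages):
    """Breadth-first crawl from `start`; returns (crawled URLs, indexed URLs)."""
    queue, seen = deque([start]), {start}
    crawled, indexed = [], []
    while queue:
        url = queue.popleft()
        html = pages.get(url)
        if html is None:
            continue  # broken link: nothing to read
        parser = PageParser()
        parser.feed(html)
        crawled.append(url)
        if not parser.noindex:  # crawled, but only indexed if allowed
            indexed.append(url)
        for link in parser.links:
            if link not in seen:  # visit each URL at most once
                seen.add(link)
                queue.append(link)
    return crawled, indexed


crawled, indexed = crawl("/", PAGES)
print("crawled:", crawled)
print("indexed:", indexed)
```

In this sketch all three pages are crawled, but `/draft` is left out of the index because it asks not to be indexed. Real search engines weigh many more signals, such as content quality and duplication, when deciding what to index.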