7 Ways to Make Google Crawl Your Website

If you want your content to be displayed in the search results, you have to remember about crawling. The Google index lists all the websites that have been visited by robots. While browsing your page, Google detects any new or changed content and updates the index. How to crawl a website in Google? Keep reading to discover 7 simple methods!

Table of contents:

- Crawling – What Is It And How Does It Work?

- How to Make Google Crawl My Website?

- Crawl Budget

- How to Crawl a Website in Google – The Takeaway

Crawling – What Is It And How Does It Work?

Crawling is the process of integrating new websites into the Google search engine index. During this process, everything is determined by the applied tags, namely:

- index

- no-index

When talking about the first tag, The Google bot (also called a spiderbot, a web crawler, or web wanderer) visits your website, examines the source code, and then indexes it.

On the other hand, the no-index tag means that the page isn’t included in the web search index, therefore, it’s not displayed to users in the search results.

So, actually, it can be stated that when you browse the net, you actually browse the index, meaning Google’s database.

Before indexing the website, Google bots analyze various factors. They take into account elements such as keywords, content, correct source code or title, and alt attributes.

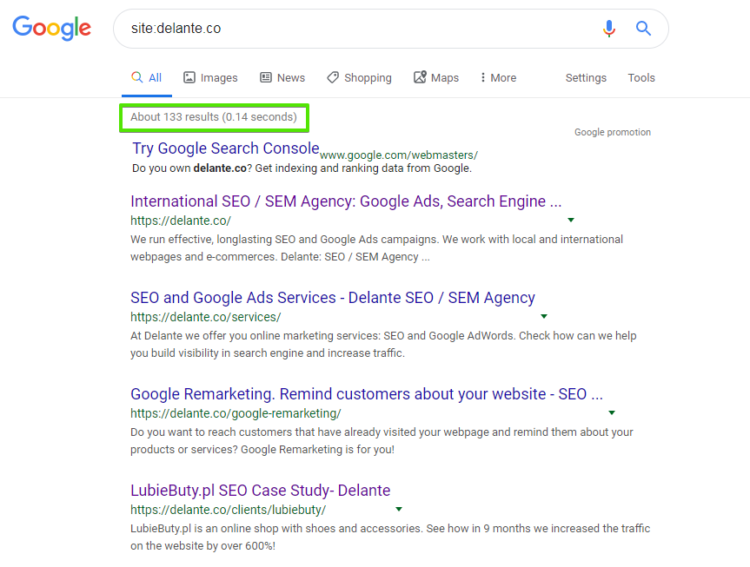

How to Check if Your Website Is Indexed?

To check the indexing status of a specific link, such as a profile, just enter it into the search engine. If it’s displayed in the search results, it means that your website has been indexed.

If you wish to check the indexing of a whole website or blog and the number of new indexed subpages, just type in the following command:

„site:http://websitename.com”

Website Indexing

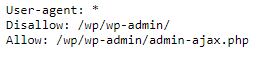

There are a few waysto make Google crawl a website. The first thing you need to do is to check if the robots.txt file allows Google robots to properly index your page.

Robots.txt is a file responsible for communicating with the robots that index your website. This file is the first thing checked by Google robots after entering a page and it can be used to show them how to index your site.

Wondering how to make Google crawl your website? Let’s delve into details!

How to Make Google Crawl My Website?

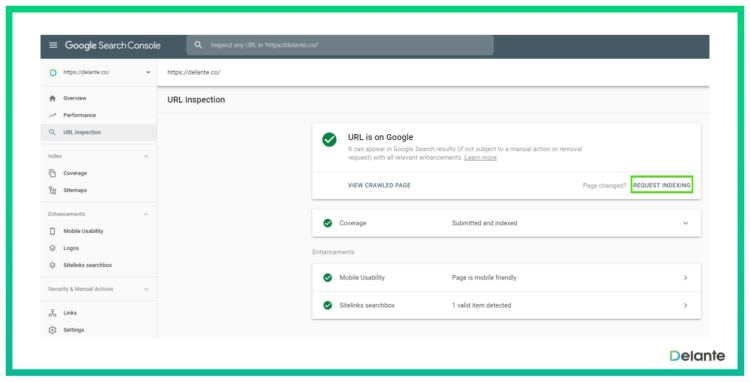

1. Adding the Website to Google Search Console

It’s the quickest and easiest way to index your website – it takes only up to a few minutes. After this time your website becomes visible on Google. Just paste your website address into the indexing box in Google Search Console and click request indexing.

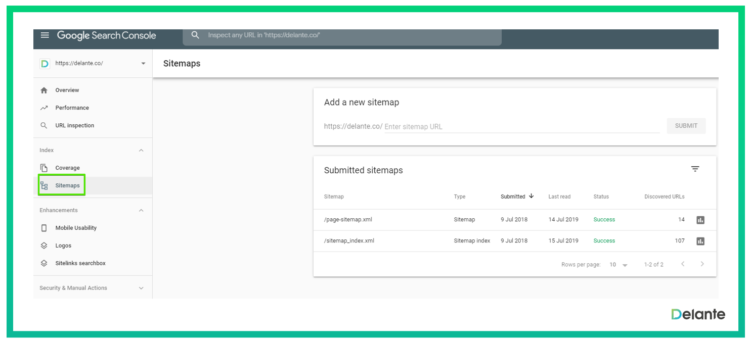

2. Using XML Maps

The XML map is designed specifically for Google robots. Since it significantly facilitates site indexing, every website should have it. The XML map is a database of all information about URL addresses, subpages, and their updates.

Once you manage to generate an XML map of your website, you should add it to the Google search engine. Thanks to it, Google robots will know where to find a particular sitemap and its data.

Use Google Search Console in order to send your XML map to Google. Once the map is processed, you’ll be able to display statistics concerning your website and various useful information about errors.

3. Using a PDF file

Texts in PDF are more and more frequently published on various websites. If the text is in this format, Google may process the images to extract the text.

How do search engine robots treat links in PDF files? Exactly the same way as other links on websites as they provide both PageRank and other indexing signals. However, remember not to include no-follow links in your PDF file.

In order to check the indexing of PDF files, you need to enter a given phrase accompanied by „PDF” in Google.

PDF is just one of many types of files that can be indexed by Google. If you want to find out more, go to: https://support.google.com/webmasters/answer/35287?hl=en

4. Using Online Tools

It’s a basic and very simple form of indexing.

There are various tools that enable doing it, however, most of them are paid or have a limited free version. Indexing with the use of online tools is important, especially when talking about links and pages you don’t have access to. By indexing them, Google robots are able to freely crawl them.

You can use one of the following online indexing tools:

- http://www.linkcentaur.com

- https://black-star.link/

- http://www.instantlinkindexer.com/

- http://www.indexification.com/

5. Link Building

Both internal and external dofollow links are extremely important tools you can use to guide Google robots and to encourage them to visit (and thus index) particular subpages.

When you provide internal links to product or service categories, blog posts, and other important elements, robots crawling your page will see and index them.

Read more about best practices for successful internal linking.

The same applies to dofollow external links. If other websites link to you, it’s a sign to Google that it should visit your page. Moreover, quality backlinks have a positive impact on important parameters like PA (page authority) and DA (domain authority) that affect positions in the SERPs and show the search engine that your page is of high quality.

Get a free SEO on-page checklist and check if your website is SEO and Google-friendly! Boost your visibility with the best interactive tool on the web!

6. Indexing Through Social Media Shares

Although nofollow social media links don’t have a direct impact on website positions in the search results, they help you increase your brand recognition, allow you to distribute your content via various channels, improve your online visibility, and show Google robots that your posts are useful and appreciated by users.

In 2017, Google’s Gary Illyes said that:

The context in which you engage online, and how people talk about you online, actually can impact what you rank for.

Therefore, social media links can support SEO and indexing.

7. Excluding Low-Quality Pages From Indexing

Making sure that important subpages of your website are indexed is as important as making sure that common, invaluable website elements aren’t analyzed by Google robots.

If you want to save your crawl budget (we’ll discuss it in detail below), you can use noindex tags and nofollow links to prevent Google robots from crawling given subpages.

It’s a good idea to use noindex tags and nofollow links when talking about:

- Subpages with terms and conditions,

- Subpages with privacy policy,

- Shopping carts,

- External links,

- Social media links.

Crawl Budget

Crawl budget is a resource for indexing your website.

More specifically, crawl budget is the number of pages indexed by Google robots during a single visit to your site. The budget depends on the size of your website, its condition, errors encountered by Google, and, of course, the number of backlinks to your site.

Robots index billions of subpages every day, so every visit to the site burdens some of the owner’s and Google’s servers.

There are two parameters that have the most noticeable impact on the crawl budget:

- crawl rate limit – limit of the indexing factor

- crawl demand – the frequency at which the website is indexed

Crawl rate limit is a limit that has been set so that Google doesn’t crawl too many pages in a given time. It should prevent the website from being overloaded as it refrains Google from sending too many requests that would slow down the speed and the loading time of your site.

However, the crawl rate limit may also depend on the speed of the website itself – if it’s too slow then the entire process is also slowed down. In such a situation Google is able to examine only a few of your subpages. The crawl rate limit is also influenced by the limit set in Google Search Console. The website owner can change the limit value through the panel.

Crawl demand is about technical limitations. If the website is valuable to its potential users, Google robots are more willing to visit it. There is also a possibility that your website won’t be indexed even if its crawl rate limit is higher. This may happen due to two factors:

- popularity – websites that are very popular with users are frequently visited by Google robots.

- up-to-date topicality – Google algorithms check how often the website is updated.

How to Crawl a Website in Google – The Takeaway

There are numerous ways to make Google crawl your website. The most popular ones include:

- website indexing with the use of Google Search Console,

- XML maps,

- website indexing with PDF files,

- website indexing with the use of online tools,

- website indexing with XML maps,

- website indexing with link building.

While indexing your site, you need to take into account several factors that will make it easier for you to achieve the best possible results. These factors include:

- meta tags,

- the robots.txt file,

- crawl budget.

Make sure that your website is crawled by Google regularly – if you add new elements to your website or update older elements it’s important that Google sees it. Without a properly crawled and indexed website, no amount of great content or SEO efforts will work if Google is not aware of them.

This is an update of an article published in 2019.