Traffic Bot in Google Analytics – How to Block It Out?

Google Analytics is the most important source of information about website traffic. However, bots can sometimes distort that information. How can you avoid it? How do you block bots in Google Analytics? Keep reading to find out!

Unfortunately, bots messing with Google Analytics data is quite common. The important thing is to notice that something is wrong, catch the anomalies in the statistics, and block the bot so that the data you get from the tool is as accurate and useful for your business as possible.

Over the last couple of days, bot problems have occurred on many websites. Suspicious traffic can come from addresses like:

- /bottraffic.live

- /trafficbot.live

- /bot-traffic.xyz

How to Check If a Traffic Bot Visited Your Website?

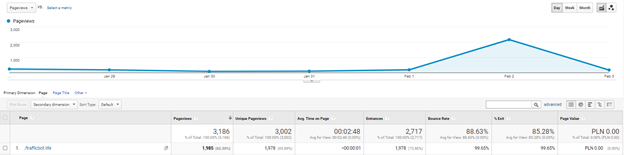

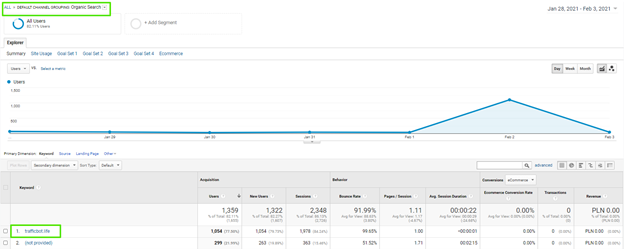

If you notice an anomaly in your website traffic (such as the one shown in the pictures above), a bot may have hit your site. You can confirm this in two places in Google Analytics:

1. Go to “Acquisition” -> “Organic Traffic”

2. Go to “Behavior” -> “Site Content” -> “All Pages” or “Landing Pages”

If you notice significant traffic from the above-mentioned addresses, your site has been hit by a bot! Now it’s time to eliminate it so it won’t mess with your stats anymore.
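If you export the “All Pages” report, a short script can tally how much of the traffic landed on the suspicious addresses. Below is a minimal sketch in Python, assuming the export has already been read into (page path, sessions) pairs; the helper name bot_share and the sample rows are hypothetical, not real data:

```python
# Suspicious addresses listed in this article.
BOT_PATHS = ["/bottraffic.live", "/trafficbot.live", "/bot-traffic.xyz"]


def bot_share(rows):
    """Return (bot_sessions, total_sessions) for (page_path, sessions) rows.

    Hypothetical helper: a row counts as bot traffic if its page path
    contains one of the suspicious addresses.
    """
    total = 0
    bot = 0
    for path, sessions in rows:
        total += sessions
        if any(addr in path for addr in BOT_PATHS):
            bot += sessions
    return bot, total


# Made-up sample rows standing in for an exported report.
sample = [
    ("/", 120),
    ("/blog/seo-tips", 45),
    ("/bottraffic.live", 300),
    ("/bot-traffic.xyz", 80),
]

bot, total = bot_share(sample)
print(f"{bot} of {total} sessions ({bot / total:.0%}) hit suspicious paths")
```

A large share concentrated on those paths, especially during the spike, is a strong hint that the anomaly comes from the bot.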

How to Exclude Bot Traffic from Google Analytics?

1. Removing bot traffic from data already collected

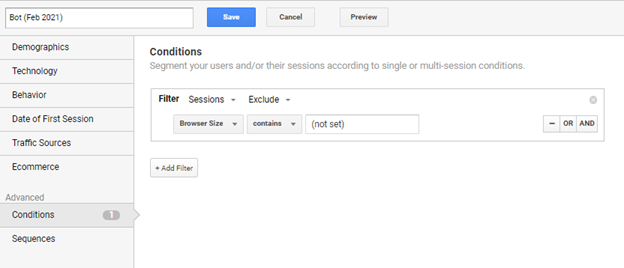

A new filter won’t help if you want to analyze data already collected in GA, because filters apply only to future data. In this case, the best way is to create a segment that hides the bot data from your reports.

- Click on “Add Segment” at the top of Google Analytics

- Configure the exclusion segment: under “Conditions”, exclude sessions where Browser Size contains “(not set)”.

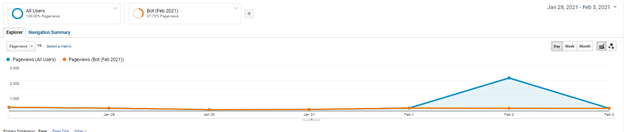

After saving the segment, you can view traffic data without the bot sessions, or compare all data with the segmented data.
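The segment condition is simply a per-session exclusion rule. Expressed in Python against exported session rows, it looks roughly like the sketch below; the field name browser_size and the sample records are assumptions for illustration, not a Google Analytics API:

```python
def is_bot_session(session):
    """Mirror of the segment condition: Browser Size contains "(not set)"."""
    return "(not set)" in session.get("browser_size", "")


def split_sessions(sessions):
    """Return (kept, excluded), like comparing the segment with all data."""
    kept = [s for s in sessions if not is_bot_session(s)]
    excluded = [s for s in sessions if is_bot_session(s)]
    return kept, excluded


# Hypothetical exported sessions.
sessions = [
    {"page": "/", "browser_size": "1920x1080"},
    {"page": "/bottraffic.live", "browser_size": "(not set)"},
    {"page": "/contact", "browser_size": "390x844"},
]

kept, excluded = split_sessions(sessions)
print(len(kept), "kept,", len(excluded), "excluded")
```

Like the segment itself, this only hides sessions from the view of the data; nothing is deleted from the underlying records.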

2. Creating a filter that will prevent collecting bot data in the future

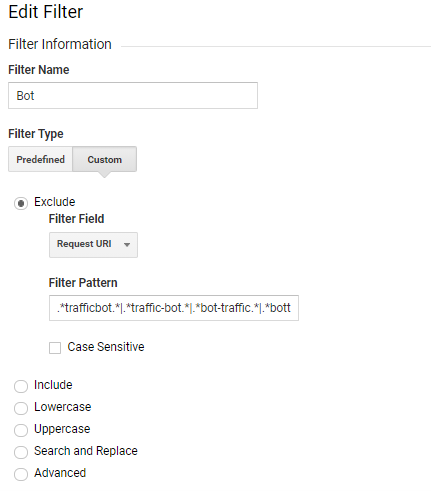

- Go to “Admin” -> “View” -> “Filters” -> “Add Filter”

- Choose a “Custom” filter, select “Exclude”, set the filter field to “Request URI”, and type in the filter pattern: .*trafficbot.*|.*traffic-bot.*|.*bot-traffic.*|.*bottraffic.*

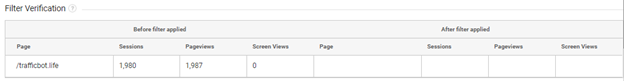

- Test the filter

I recommend first creating the filter in a Google Analytics test view and then, if everything works fine, adding it to your main view.
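Before saving the filter, you can also sanity-check the pattern offline. This sketch uses Python’s re module, which is not the regex engine Google Analytics runs, so treat it as an approximation; the sample paths are made up. It checks which Request URIs the pattern would exclude and shows why a pattern that starts with * is rejected:

```python
import re

# Filter pattern from this article.
PATTERN = r".*trafficbot.*|.*traffic-bot.*|.*bot-traffic.*|.*bottraffic.*"
bot_filter = re.compile(PATTERN)


def would_exclude(request_uri):
    # re.search looks for a match anywhere in the URI. Because every
    # alternative is wrapped in .*, a full-string match gives the same result.
    return bot_filter.search(request_uri) is not None


for uri in ["/bottraffic.live", "/trafficbot.live", "/bot-traffic.xyz", "/blog/seo-tips"]:
    print(uri, "->", "excluded" if would_exclude(uri) else "kept")

# Dropping the leading dot leaves "*" with nothing to repeat,
# which makes the whole pattern invalid.
broken = PATTERN[1:]
try:
    re.compile(broken)
    broken_is_valid = True
except re.error as err:
    broken_is_valid = False
    print("invalid pattern:", err)
```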

3. Blocking the bot in the robots.txt file

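Blocking the bot in the robots.txt file tells it not to crawl your website. Well-behaved bots respect these rules, but robots.txt is a request rather than an access control, so a bot that ignores it can still reach your site. To add the block, put these rules in your robots.txt file: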

User-agent: bot-traffic
User-agent: bottraffic
User-agent: trafficbot
Disallow: /

The above rules apply only to this specific bot, identifying itself as trafficbot, bottraffic, or bot-traffic. If your website is hit by a differently named bot, you will need to adjust the rules in the same way.
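To see how a parser reads such rules, you can feed them to Python’s standard-library urllib.robotparser. This is only one parser (individual crawlers may behave differently), and the example.com URL is a placeholder. The sketch compares two layouts: one User-agent line per bot name in a single group, and a single comma-separated User-agent line:

```python
from urllib.robotparser import RobotFileParser

# One group: several User-agent lines sharing a single Disallow rule.
RULES = """\
User-agent: bot-traffic
User-agent: bottraffic
User-agent: trafficbot
Disallow: /
"""

# The comma-separated variant, for comparison.
COMMA_RULES = """\
User-agent: bot-traffic,bottraffic,trafficbot
Disallow: /
"""


def build_parser(text):
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = build_parser(RULES)
comma_rules = build_parser(COMMA_RULES)
url = "https://example.com/any-page"  # placeholder site

for agent in ["trafficbot", "bot-traffic", "Googlebot"]:
    print(agent, "allowed:", rules.can_fetch(agent, url),
          "| comma form allowed:", comma_rules.can_fetch(agent, url))
```

In this parser, the one-name-per-line group blocks all three bot names while still allowing other crawlers, whereas the comma-separated line is treated as one long name and blocks none of them.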

Great! That’s exactly what I need – a clear guide on how to deal with this…bot 😉

Hi,

When entering the filter pattern, Google Analytics is saying ‘Regular expression is invalid’.

There is a . missing at the beginning; it should be:

.*trafficbot.*|.*traffic-bot.*|.*bot-traffic.*|.*bottraffic.*

You need to start with a . and then the regex will work.

The . at the beginning solves this. Otherwise, the regex starts with a repeat of nothing (the first *), which is invalid. With the fix, it starts with any character (.) repeated zero or more times (*), and so on.

Enjoy!

Hey! Right, thanks for this comment; I have changed it in the article 😉

So I did this, and my paid traffic in Data Studio was also segmented out?

Excluding this bot shouldn’t affect your paid traffic, so that data shouldn’t change.

It’s a wonderful solution. I think everyone should use this method to protect their site from fake traffic. The robots.txt option is good.