Why Did My Website Drop Out of Google’s Search Results? The Most Common Developer SEO Mistakes

You put a lot of time into running your website and creating valuable content, and then it suddenly turns out that the site isn’t displayed in Google. At first you may think that being indexed out is a search engine penalty. Don’t worry – that happens very rarely. More often, the problem is caused by a mistake made by your developer.

Table of contents

- How to check if a website is indexed in Google?

- The most common developer SEO mistakes that cost you the ranking

How to check if a website is indexed in Google?

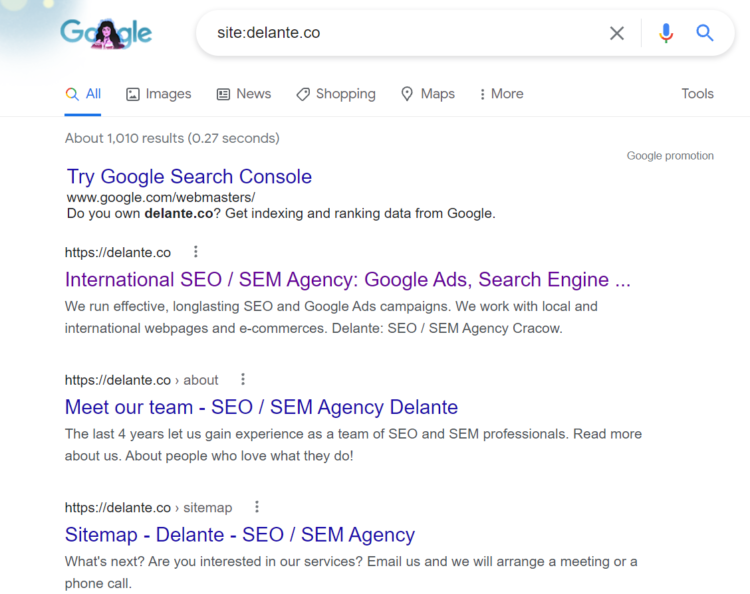

To notice that your website has been removed from the search results, you first need to know how to check it. Even if the site isn’t displayed in the SERPs when you type in a selected keyword, it doesn’t necessarily mean that it has been indexed out. For some reason, Google’s algorithms may have decided to decrease your website’s visibility, but they still take it into account even though it doesn’t rank high. To check your indexation quickly, enter the following query in Google: site:websiteaddress.com.

When I typed in site:delante.pl, Google displayed the following results:

If it turns out that your website isn’t shown in the ranking or there are fewer and fewer results, it’s a sign that there is a problem. What should you do? Try to determine the cause of this state of affairs.

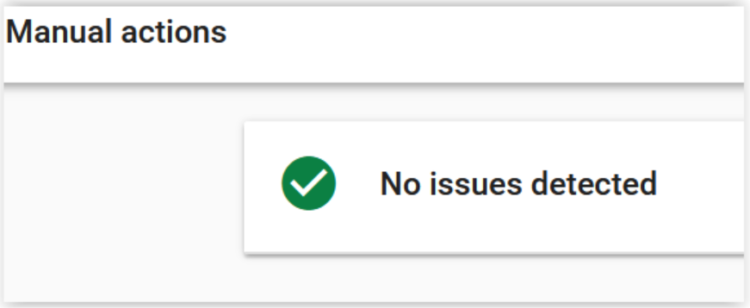

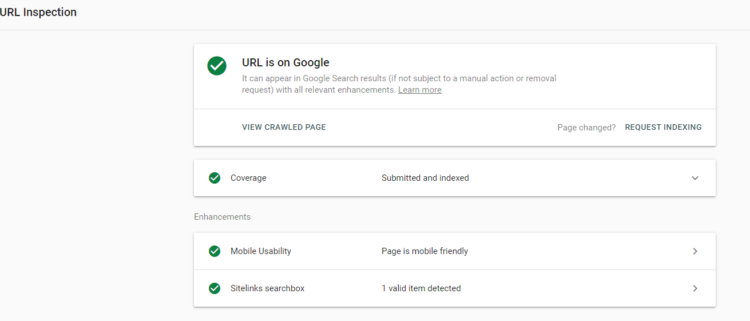

Start by checking Google Search Console. First, verify the website, for example by placing the appropriate code in the <head> section. Once that’s done, use Google Search Console to make sure that the search engine hasn’t taken any manual action against your page. What do we mean by that? A Google specialist responsible for reviewing websites may come to the conclusion that your site doesn’t meet the search engine’s quality guidelines and, as a consequence, that its position needs to be lowered or the page has to be indexed out.

How did that specialist end up on your page? Perhaps it was an accident, perhaps someone reported that the site isn’t in line with the guidelines. A website may drop out of Google’s search results if it’s considered spam. This can happen if your page is filled with automatically generated content or texts copied from other online sources. Nevertheless, in most cases there is a completely different cause of the problem and you’ll see the following message:

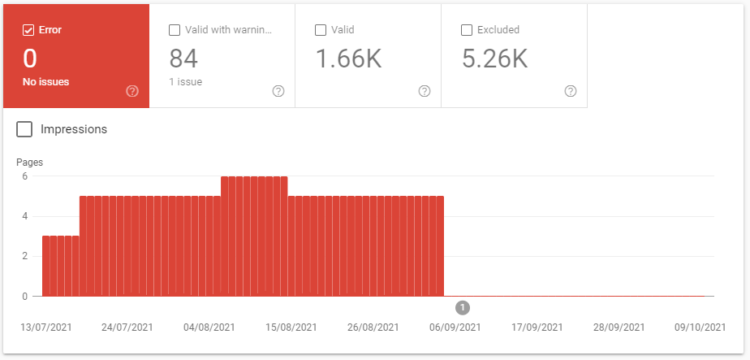

Then go to Google Search Console and enter the status tab to see information about the number of indexed subpages and potential mistakes:

Here, you can also find out why a given subpage has been indexed out – e.g. if you click a notification saying that the page contains a redirect, you’ll see which URLs are affected by the problem.

It’s a fine way to discover the cause of indexing problems. In many cases, they result from a mistake made by a developer. Wondering whether it’s possible to fix issues on your own? If you aren’t an expert in the field, contact a specialist and explain your problem to solve it effectively.

The most common developer SEO mistakes that cost you the ranking

There might be a number of reasons why your website disappeared from the search results. Below is a list of the most common developer SEO mistakes that can lead to it.

The noindex tag

At the stage of website creation, developers frequently block the page from being indexed by search engine robots. They want to ensure that only the finished version appears in the search results. For this reason, they use the noindex tag, which stops Google bots. What does such page code look like? It’s placed in the <head> section of the website and can be:

<meta name="robots" content="noindex"> – if you want to block most search engine robots,

<meta name="googlebot" content="noindex"> – if you want to block only Google robots.

Our experience shows that such a tag may be left on the site accidentally. In that case, it’s enough to remove it and wait a while – the website should gradually be indexed again. How to check if the noindex tag is the reason for the problem? Check the source of the page: click the right mouse button and select the appropriate option (in Chrome, this is “View Page Source”). Then use CTRL+F to run the search function and type “noindex”. If you find code similar to the examples above, remove it from the page template or the CMS setting that generates it.
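If you have many subpages to check, the manual search described above can be automated. Below is a minimal sketch in Python, using only the standard library, that scans an HTML document for the two meta tags mentioned earlier. The names `RobotsMetaParser` and `find_noindex` are our own, made up for this illustration, and the sketch looks only at meta tags in the HTML you pass to it.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # e.g. {"robots": ["noindex", "nofollow"]}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        name = attr.get("name", "").strip().lower()
        if name in ("robots", "googlebot"):
            values = [v.strip().lower() for v in attr.get("content", "").split(",")]
            self.directives.setdefault(name, []).extend(v for v in values if v)


def find_noindex(html):
    """Return the names of the meta tags that carry a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return sorted(name for name, values in parser.directives.items() if "noindex" in values)
```

To use it, pass in the source of a page, for example the text you copied from “View Page Source”. An empty list means that neither of the two tags contains noindex.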

Blocked .htaccess file

The .htaccess file is a server configuration file that can, among other things, deny access to selected visitors, including search engine robots. It’s analyzed every time the page is loaded, so any modification takes effect immediately. The blocking code may vary, so it’s easier to test the result than to look for one specific rule. To evaluate whether the problem stems from a block in the .htaccess file, check Google Search Console and use the “Check any URL in…” function. If the site can be retrieved successfully, you need to keep looking for the cause of the problem.
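As a rough complement to the check in Google Search Console, you can compare how the server answers a regular browser and a request that introduces itself as Googlebot. The sketch below is only an approximation under a few assumptions: the helper names `status_for` and `googlebot_blocked` are hypothetical, the browser user-agent string is an arbitrary example, and the test catches only blocks based on the user-agent header. Rules based on IP addresses won’t be detected this way, so the result from GSC remains the more reliable one.

```python
import urllib.error
import urllib.request

# User-agent string that Googlebot uses to introduce itself.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# An arbitrary browser-like user agent, used only for comparison.
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64)"


def status_for(url, user_agent, timeout=10):
    """Request url with the given User-Agent header and return the HTTP status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises for 4xx/5xx answers; the code is available on the exception.
        return error.code


def googlebot_blocked(url, fetch=status_for):
    """True if a browser-like request succeeds but a Googlebot-like one is refused."""
    browser_status = fetch(url, BROWSER_UA)
    bot_status = fetch(url, GOOGLEBOT_UA)
    return browser_status < 400 <= bot_status
```

The `fetch` parameter exists so that the comparison logic can be tried out without touching the network, e.g. by passing a function that returns fixed status codes.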

Blocked robots.txt file

This file can be used to block search engine robots from crawling your entire site. It should be located in the main website directory; however, your site doesn’t have to have one. To check if it exists, enter the address: yourwebsite.com/robots.txt. If it opens, verify the content. If you find the following code there, robots are told not to crawl any page, so the site’s content won’t be indexed:

User-agent: *

Disallow: /

Change it to:

User-agent: *

Allow: /
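The effect of both versions of the file can be verified with a short script. This is a minimal sketch based on `urllib.robotparser` from the Python standard library; the function name `is_blocked` and the example.com addresses are our assumptions. Keep in mind that Python’s parser doesn’t resolve conflicting rules in exactly the same way as Google does, so treat the result as a quick sanity check and not as the final verdict.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt content forbids user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() expects the file as a list of lines; the rules of one group
    # must directly follow its User-agent line, without blank lines between them.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


blocking = "User-agent: *\nDisallow: /"
allowing = "User-agent: *\nAllow: /"

print(is_blocked(blocking, "https://example.com/blog/"))  # True
print(is_blocked(allowing, "https://example.com/blog/"))  # False
```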

Page removed from Google Search Console

Google Search Console (GSC) not only helps you get your website indexed faster, for example thanks to the sitemaps you add to it, but also gives you access to functionalities that speed up the process of removing subpages from the search engine. Why would you need such a solution? It’s helpful in situations when you don’t want given content to be indexed, but you forgot to block it and it’s displayed in Google. The option also comes in handy if your site has been hacked and filled with spam texts that you need to delete quickly.

However, it’s crucial to use this functionality correctly. After logging into GSC, select “Removals”, then “New Request”, and enter the URL you want to index out. If you enter the home page address and check “Remove all URLs with this prefix”, the entire site will disappear from Google! GSC will show you a warning:

Keep in mind that the process won’t start until you click “submit request”.

Note: a removal request covers both the HTTP and HTTPS versions of a site. If you have both versions added to GSC and you start removing one of them, the entire site will be indexed out as a consequence.

HTTP response code

Check if your URL returns the HTTP 200 code, which means that everything is fine. If it returns a different code, Google will in all probability not index the page. Codes in the 400 range (e.g. 404) indicate a problem with the request, such as an address that doesn’t exist, and codes in the 500 range mean that the request reached the server but couldn’t be completed due to an error on the server. How to fix the problem? Remove the cause of the error.
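To check the response code without opening the browser’s developer tools, you can use a few lines of Python. The sketch below relies only on the standard library; `describe_status` and `fetch_status` are names invented for this example. Note that `urlopen` follows redirects, so the code you get back describes the final address, not the redirect itself.

```python
import urllib.error
import urllib.request


def describe_status(code):
    """Map an HTTP status code to its general meaning."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"


def fetch_status(url, timeout=10):
    """Return the status code of the final response for url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises for 4xx/5xx answers; the code is available on the exception.
        return error.code
```

A call such as `describe_status(fetch_status("https://yourwebsite.com/"))` should return "ok" for a page that Google is able to index.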

Content available for logged in users

Is your website filled with content that you decided to make available only to those who set up an account and log in? Unfortunately, Google robots won’t log in. As a consequence, they can’t see that content and will index the page out when checking the URL.

Incorrectly implemented hreflang tag

This tag is supposed to inform search engines that a given site has various language versions. It comes in handy when your offer is addressed to different target groups and you want to ensure that it’s presented in the language appropriate for the user. Incorrect implementation of this tag can be a reason why your website completely dropped out of Google’s search results. To learn more, check out our blog entry: Hreflangs. How to tag language versions of your website?
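One frequent hreflang mistake is a missing return link: if the English version points to the Polish one, the Polish version has to point back, otherwise the annotations may be ignored. The sketch below shows how such a check could look. It assumes that you’ve already collected the hreflang annotations of each page into a dictionary; the function name `missing_return_links`, the data layout and the example.com addresses are all hypothetical.

```python
def missing_return_links(annotations):
    """Find hreflang links that aren't confirmed by the page they point to.

    annotations maps each page URL to its hreflang entries,
    e.g. {"https://example.com/en/": {"pl": "https://example.com/pl/"}}.
    Returns a sorted list of (page, language, target) tuples without a return link.
    """
    problems = []
    for page, alternates in annotations.items():
        for language, target in alternates.items():
            if target == page:
                continue  # a self-reference needs no return link
            links_on_target = annotations.get(target, {})
            if page not in links_on_target.values():
                problems.append((page, language, target))
    return sorted(problems)


site = {
    "https://example.com/en/": {"en": "https://example.com/en/", "pl": "https://example.com/pl/"},
    "https://example.com/pl/": {"pl": "https://example.com/pl/"},  # no link back to /en/
}
print(missing_return_links(site))
```

In this example the Polish page doesn’t link back to the English one, so the script reports the link from /en/ to /pl/ as unconfirmed.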

Incorrectly implemented rel=canonical tag

If your site has several subpages with the same content, Google won’t index all of them. Instead, it’ll select only one – not necessarily the one you care about. However, if you indicate a canonical link, the search engine should index the correct address. Problems start if you implement the tag incorrectly or don’t do it at all. In the latter case, duplicate content may be indexed out. Remember that canonicals are crucial for websites with multiple language versions – without them, the abovementioned hreflangs may not be analyzed properly.
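A quick way to see what a given subpage declares as its canonical address is to read the tag straight from the HTML. Here’s a minimal sketch using the Python standard library; `CanonicalParser`, `check_canonical` and the labels it returns are our own naming, chosen for this illustration. Trailing slashes are ignored in the comparison, which is a simplification.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {key: (value or "") for key, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split() and attr.get("href"):
            self.canonicals.append(attr["href"].strip())


def check_canonical(html, page_url):
    """Classify the canonical declaration found in html for the page at page_url."""
    parser = CanonicalParser()
    parser.feed(html)
    # Relative hrefs are resolved against the address of the page itself.
    found = {urljoin(page_url, href) for href in parser.canonicals}
    if not found:
        return "missing"
    if len(found) > 1:
        return "conflicting"
    canonical = found.pop()
    return "self" if canonical.rstrip("/") == page_url.rstrip("/") else "points elsewhere"
```

“points elsewhere” isn’t an error in itself: a filtered or duplicated subpage is supposed to point to the main version. It becomes a problem when the page you want to rank points to a different address, or when the result is “missing” or “conflicting”.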

Can you share any other situations when Google may index websites out? Let us know in the comment section below!