How to Optimize Your Crawl Budget?

If you want your website to be visible in search results, make sure it's crawled and indexed by Google's robots. Paying attention to your crawl budget helps with this: it can improve how your website is indexed and, as a result, its positions in the search results. Keep reading to find out how to optimize your crawl budget effectively!

Crawl budget – what is it?

Crawl budget is the number of subpages on your website that search engine robots can and want to crawl within a given period. The indexing robots of the most popular search engine, Google, scan millions of subpages every day. They therefore work within limits that keep crawling efficient and reduce the computing power it consumes. That's why optimizing your website according to SEO principles helps make sure your most important subpages are crawled and indexed, which can translate into higher positions on Google.

Why is it worth taking care of the crawl budget?

On small websites with relatively few subpages, indexing doesn't take long. A website with several thousand subpages and regularly published content, on the other hand, is much harder to crawl in full. This is where the crawl budget comes into play. Managing it helps you take the right steps to improve how your website works and how it's indexed.

The crawl budget is affected by several factors:

- crawl rate limit – the maximum number of subpages search engine robots will fetch over a short period of time without overloading the server;

- crawl demand – how much the search engine wants to (re)crawl the website, driven e.g. by frequent content updates and growing popularity;

- crawl health – how quickly and reliably the server responds; short response times allow more subpages to be crawled;

- site size – the number of subpages;

- subpage weight – heavy subpages, e.g. ones that rely on JavaScript, use up more of the crawl budget.

Phases of crawl budget optimization

Allow crawling of your most important subpages in the robots.txt file.

This is the first and, at the same time, the most important stage of the optimization. The robots.txt file tells search engine robots which files and subpages they may crawl and which they should skip. You can edit your robots.txt file manually or use dedicated audit tools.

If you use a tool, load your robots.txt file into it (if the tool supports this). You can then allow or block crawling of any selected subpage. The last step is to upload the edited file back to the root directory of your website.

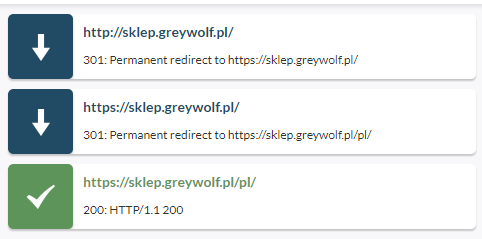
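Before uploading the edited file, you can check how your rules will be interpreted. The sketch below uses Python's standard `urllib.robotparser` module to evaluate a robots.txt file locally. The rules, the `www.example.com` domain and the blocked paths (`/cart/`, `/search`) are hypothetical examples, not recommendations for every site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example store: keep the cart and internal
# search results out of the crawl, leave everything else crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in (
        "https://www.example.com/products/running-shoes",
        "https://www.example.com/cart/checkout",
        "https://www.example.com/search?q=shoes",
    ):
        verdict = "crawlable" if rp.can_fetch("Googlebot", url) else "blocked"
        print(f"{url} -> {verdict}")
```

With these rules, the product page is reported as crawlable, while the cart and internal search URLs are blocked. One caveat applies. Python's parser uses the first rule that matches, whereas Google uses the most specific one. Keeping the `Disallow` lines above `Allow: /` makes both interpretations agree here.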

Watch out for redirect chains

Every redirect chain you manage to eliminate is a win. On large websites, it's almost impossible to avoid 301 or 302 redirects altogether.

Keep in mind, however, that when redirects form a long chain, the search engine robot may stop following it before it reaches the final URL. As a result, the subpage you actually care about may not be indexed. One or two redirects shouldn't disrupt how your website works, but it's still worth keeping an eye on them.
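If your audit tool can export redirects as source/target pairs, a few lines of code are enough to spot chains and work out where each old URL should point directly. This is a minimal sketch. The redirect map is a hypothetical example, and the `max_hops` cap of 10 is an assumed safety limit for the script, not a number taken from this article.

```python
from typing import Dict, List

# Hypothetical redirect map exported from a site audit: source path -> target path.
REDIRECTS = {
    "/old-shoes": "/shoes-2023",
    "/shoes-2023": "/shoes",
    "/sale": "/offers",
}


def resolve_chain(redirects: Dict[str, str], start: str, max_hops: int = 10) -> List[str]:
    """Follow redirects from `start` and return every URL visited, in order.

    Raises ValueError on a redirect loop or when the chain exceeds `max_hops`
    (an assumed safety cap for this script).
    """
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirect hops from {start}")
    return chain


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every source directly at its final destination (a single hop)."""
    return {source: resolve_chain(redirects, source)[-1] for source in redirects}


if __name__ == "__main__":
    for source in REDIRECTS:
        chain = resolve_chain(REDIRECTS, source)
        if len(chain) > 2:  # more than one hop means a chain worth fixing
            print("chain:", " -> ".join(chain))
    print(flatten(REDIRECTS))
```

Updating each redirect to point straight at the destination that `flatten()` returns turns every chain into a single hop.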

Use HTML wherever possible

Google's indexing robots can now process JavaScript and XML (Google Search no longer indexes Flash content at all). Keep in mind, however, that other search engines don't yet handle these formats equally well. That's why you should stick to HTML wherever possible. Every crawler reads it reliably, and it keeps Googlebot's work as simple as possible.

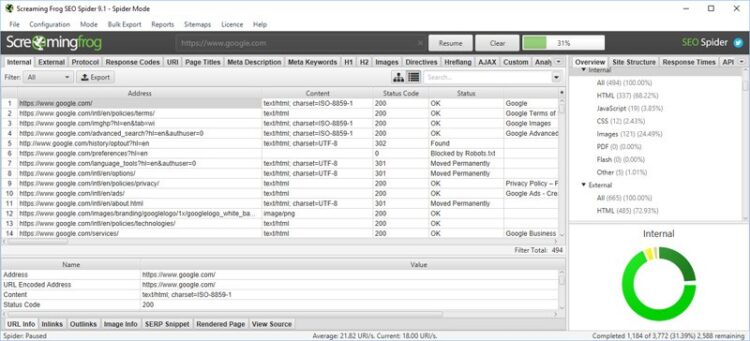
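One rough way to see what a crawler gets without rendering is to look at the HTML exactly as your server sends it. The sketch below uses Python's standard `html.parser` to check whether a phrase appears in that raw HTML outside of `<script>` and `<style>` tags. The two sample pages are hypothetical. The code deliberately doesn't execute JavaScript, so text that only appears after a script runs won't be found.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text from raw HTML, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []  # text fragments found outside scripts and styles

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_in_raw_html(html: str, phrase: str) -> bool:
    """True if `phrase` appears in the HTML as served, before any JavaScript runs."""
    extractor = VisibleTextExtractor()
    extractor.feed(html)
    extractor.close()
    return phrase in " ".join(extractor.chunks)


# Hypothetical pages: one serves the heading in HTML, the other injects it with JavaScript.
STATIC_PAGE = "<html><body><h1>Running shoes</h1><p>Free delivery</p></body></html>"
JS_PAGE = ('<html><body><div id="app"></div><script>'
           'document.getElementById("app").textContent = "Running shoes";'
           '</script></body></html>')

if __name__ == "__main__":
    print("static page:", text_in_raw_html(STATIC_PAGE, "Running shoes"))
    print("JavaScript page:", text_in_raw_html(JS_PAGE, "Running shoes"))
```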

Don’t let errors waste your crawl budget

Crawling URLs that return 404 and 410 errors wastes your crawl budget. If that argument doesn't convince you, think about how these errors affect user experience.

Therefore, it's recommended to fix all URLs that return 4xx and 5xx status codes. Website audit tools such as Screaming Frog or SE Ranking can help you find them.
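If you'd like to double-check audit results yourself, the sketch below fetches status codes with Python's standard `urllib.request` and groups the errors by class. The sample URLs and codes are hypothetical. Some servers answer HEAD requests differently from GET, so treat the results as a first pass rather than a definitive audit.

```python
import urllib.error
import urllib.request
from collections import defaultdict


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for `url` using a HEAD request.

    urlopen follows redirects and raises HTTPError for 4xx/5xx responses,
    so the error's code is returned instead. Network failures (URLError)
    are left to the caller.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def group_errors(results):
    """Group (url, status) pairs into '4xx' and '5xx' buckets; other codes are skipped."""
    errors = defaultdict(list)
    for url, status in results:
        if 400 <= status < 600:
            errors[f"{status // 100}xx"].append(url)
    return dict(errors)


# Hypothetical results, e.g. exported from an audit tool or gathered with fetch_status().
SAMPLE = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/old-promo", 404),
    ("https://www.example.com/discontinued", 410),
    ("https://www.example.com/api/stock", 503),
]

if __name__ == "__main__":
    print(group_errors(SAMPLE))
```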

Handle URL parameters properly

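Remember that Google's robots treat every distinct URL as a separate page. If the same content is available under several parameterized addresses (e.g. with sorting or tracking parameters), crawl budget is wasted. Telling Google which of these URLs is the main one helps you save crawl budget and avoid duplicate content. Google retired the URL Parameters tool in Search Console in 2022. Today this is done mainly with canonical tags, consistent internal linking and, where appropriate, robots.txt rules. Don't forget to add your website to Google Search Console as well.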
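Before deciding how to handle parameters, it helps to see how many crawled URLs collapse into the same page. The sketch below assumes a hypothetical list of parameters that don't change content on the example site: `sessionid`, `sort`, `ref` and anything starting with `utm_`. Adjust the list to your own website.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that don't change page content on this particular site.
IGNORED_PARAMS = {"sessionid", "sort", "ref"}
IGNORED_PREFIXES = ("utm_",)


def normalize(url: str) -> str:
    """Drop content-neutral parameters, sort the rest, lower-case the host, drop the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith(IGNORED_PREFIXES)
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Map each normalized URL to the crawled variants that collapse onto it (2+ only)."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


# Hypothetical crawl export.
CRAWLED = [
    "https://www.example.com/shoes",
    "https://www.example.com/shoes?utm_source=newsletter",
    "https://www.example.com/shoes?sort=price&sessionid=42",
    "https://www.example.com/shoes?color=red",
]

if __name__ == "__main__":
    for main_url, variants in duplicate_groups(CRAWLED).items():
        print(main_url, "<-", variants)
```

Each group's key is a natural candidate for the canonical URL of the variants listed under it.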

Update the sitemap

An up-to-date XML sitemap makes it easier for robots to discover your subpages and understand your website's structure. Configuring the sitemap properly is another key to success.
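Many CMSs and SEO plugins generate the sitemap for you, but the format itself is simple. The sketch below builds a minimal sitemap following the sitemaps.org protocol with Python's standard `xml.etree.ElementTree`. The URLs and dates are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize as the default namespace, no "ns0:" prefix


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs, dates in YYYY-MM-DD form."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical canonical URLs and modification dates.
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/shoes", "2024-01-10"),
]

if __name__ == "__main__":
    print(build_sitemap(PAGES))
```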

Apply canonical URLs

List only canonical URLs in the sitemap. Also check that the sitemap is consistent with the most recently uploaded version of your robots.txt file, i.e. that it doesn't include URLs that robots.txt blocks.
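Both checks described above can be automated. The sketch below flags sitemap entries that robots.txt blocks or that declare a different canonical URL. It assumes you already have each page's declared canonical URL, e.g. from a crawler export. The `canonical_of` mapping and the sample data are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def audit_sitemap(sitemap_urls, robots_txt, canonical_of, user_agent="Googlebot"):
    """Return (url, problem) pairs for sitemap entries that are blocked or non-canonical.

    canonical_of is a hypothetical mapping from a URL to the canonical URL declared
    on that page; URLs missing from it are assumed to be self-canonical.
    """
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    problems = []
    for url in sitemap_urls:
        if not robots.can_fetch(user_agent, url):
            problems.append((url, "blocked by robots.txt"))
        canonical = canonical_of.get(url, url)
        if canonical != url:
            problems.append((url, f"not canonical, points to {canonical}"))
    return problems


# Hypothetical inputs.
ROBOTS_TXT = "User-agent: *\nDisallow: /cart/\n"
SITEMAP_URLS = [
    "https://www.example.com/shoes",
    "https://www.example.com/cart/",
    "https://www.example.com/shoes?sort=price",
]
CANONICALS = {"https://www.example.com/shoes?sort=price": "https://www.example.com/shoes"}

if __name__ == "__main__":
    for url, problem in audit_sitemap(SITEMAP_URLS, ROBOTS_TXT, CANONICALS):
        print(f"{url}: {problem}")
```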

Hreflang tags pay off

When analyzing websites with several language versions, Google's robots benefit from hreflang annotations. Inform Google about the localized versions of each page as accurately as possible.

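Start by adding <link rel="alternate" hreflang="lang_code" href="url_of_page" /> to the <head> section of the page, one tag per language version. Here, "lang_code" is the code of a supported language version (e.g. en or pl), and "url_of_page" is the address of that version. Alternatively, you can declare the localized versions in your sitemap. Under the <url> element that holds a page's <loc>, add an <xhtml:link rel="alternate" hreflang="lang_code" href="url_of_page" /> entry for each language version.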
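Every language version should list all the other versions as well as itself. Generating the tags from a single mapping is therefore less error-prone than writing them by hand. A minimal sketch, assuming a hypothetical mapping of language codes to URLs:

```python
from html import escape


def hreflang_links(versions: dict) -> str:
    """Render <link rel="alternate" hreflang=...> tags for the <head> of each version.

    versions maps a language code (e.g. "en", "pl") to the URL of that language
    version. The same full set of tags goes on every version, including the tag
    that points to the page itself.
    """
    return "\n".join(
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    )


# Hypothetical language versions of one page.
VERSIONS = {
    "en": "https://www.example.com/en/",
    "pl": "https://www.example.com/pl/",
}

if __name__ == "__main__":
    print(hreflang_links(VERSIONS))
```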

How to increase your crawl budget?

- Build a clear website structure and fix any errors,

- Use the robots.txt file to block crawling of all elements that don't need to appear on Google,

- Limit the number of redirect chains,

- Obtain more links to improve your PageRank (PR),

- Use Accelerated Mobile Pages (AMP).

Using Accelerated Mobile Pages (AMP) has both advantages and disadvantages. The advantages include better conversion rates, increased visibility of the published content, and dedicated AMP tags. On the other hand, the main drawbacks are high costs, the considerable work needed to implement AMP, and restrictions on the use of JavaScript.

The takeaway

Optimizing your crawl budget brings many benefits when it comes to improving the visibility of your online store. After implementing the tips above, e-commerce owners can see surprising results. Optimizing the crawl budget can have a positive impact on the indexing process. That, in turn, may help indexed subpages rank higher and improve sales of your products and services. Of course, the results depend on how much time and work you put into it.
